Research

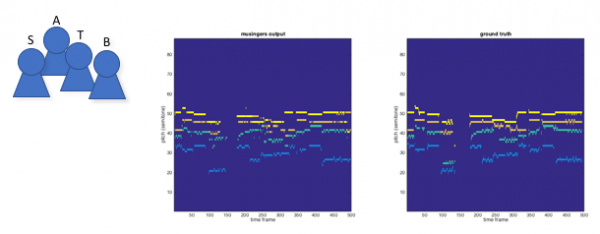

MULTI-PITCH DETECTION AND VOICE ASSIGNMENT FOR A CAPPELLA RECORDINGS OF MULTIPLE SINGERS

This project presents a multi-pitch detection and voice assignment method for audio recordings of a cappella performances by multiple singers. The proposed approach is novel: it combines an acoustic model for multi-pitch detection with a music language model for voice separation and assignment. See the project page for source code and related publications.

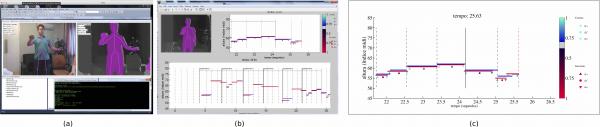

AUTOMATIC SOLFÈGE ASSESSMENT

This project presents an audiovisual approach for automatic solfège assessment. More information is available on the project page.

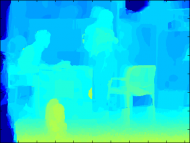

TEMPORALLY COHERENT STEREO MATCHING USING KINEMATIC CONSTRAINTS

This technique offers a simple yet effective way to generate temporally coherent disparity maps from binocular video sequences using kinematic constraints. More information is available on the project page.

MOCAP TOOLBOX EXTENSION

Periodic quantity of motion functions by Rodrigo Schramm (UFRGS) and Federico Visi (Plymouth University). The MoCap Toolbox is required to run these functions. See the manual and the function help for usage instructions. Download (zip, 1.1 MB).

The MoCap Toolbox is a Matlab® toolbox that contains functions for the analysis and visualization of motion capture data. It was developed by Petri Toiviainen (Professor) and Birgitta Burger (postdoc) and can be downloaded from the University of Jyväskylä (Finland) website.
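For readers unfamiliar with the underlying measure, a plain (non-periodic) quantity of motion can be sketched as the summed speed of all markers, smoothed over a short window. This is a minimal Python sketch assuming marker positions given as `frames[t][m] = (x, y, z)` and a frame rate `fs`. The function names are hypothetical, and the sketch does not reproduce the periodic variant or the Matlab functions in the download above.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def quantity_of_motion(frames: Sequence[Sequence[Point]], fs: float) -> List[float]:
    """Hypothetical helper: summed marker speed for each pair of consecutive frames.

    frames[t][m] is the (x, y, z) position of marker m at frame t; fs is the
    capture rate in frames per second, so the result is in position units per second.
    """
    qom = []
    for prev, curr in zip(frames, frames[1:]):
        # Displacement of each marker between two frames, scaled by fs to get speed.
        qom.append(sum(math.dist(p, c) * fs for p, c in zip(prev, curr)))
    return qom


def moving_average(values: Sequence[float], win: int) -> List[float]:
    """Hypothetical helper: smooth a QoM curve with a rectangular window of `win` samples."""
    return [sum(values[i:i + win]) / win for i in range(len(values) - win + 1)]


if __name__ == "__main__":
    frames = [
        [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
        [(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)],  # marker 0 moves 1 unit
        [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)],  # marker 1 moves 2 units
    ]
    qom = quantity_of_motion(frames, fs=10.0)
    print(qom, moving_average(qom, 2))
```

Summing speeds across markers keeps the measure independent of body segment labels. A real analysis would usually filter noisy marker data before differencing.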

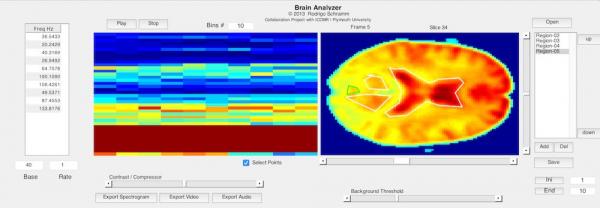

BRAIN SONIFICATION

Matlab toolbox for MRI feature extraction and sonification.

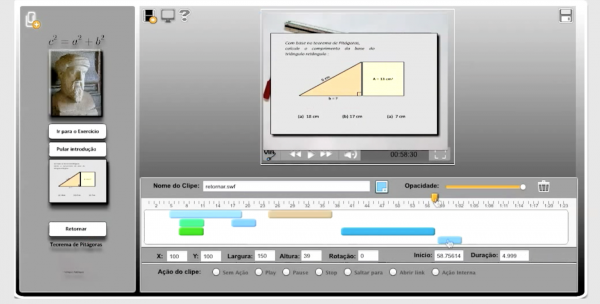

INTERACTIVE VIDEOS

Web-based multimedia tool for fast interactive video production.

Prototype software: http://caef.ufrgs.br/~schramm/via/ (site in Portuguese).

RECTANGLE/PARALLELOGRAM DETECTION

See Cláudio Jung's research page for more details and MATLAB code.

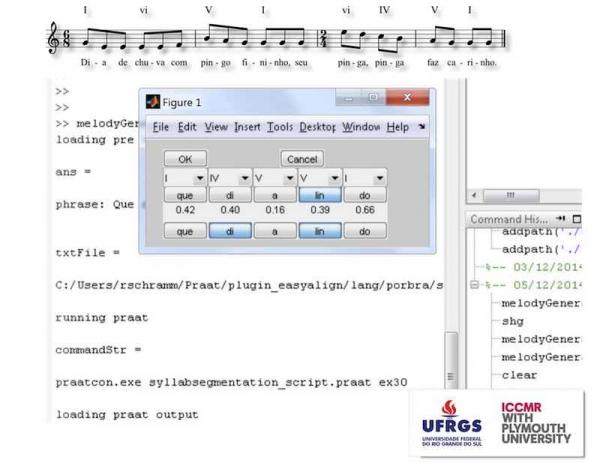

AUTOMATIC SONG COMPOSITION

A tool that generates sets of rhythmic-melodic structures arising from the expressive reading of a text. The system is designed to aid song composition: it extracts Portuguese prosody from a reading of the lyrics and applies an algorithm for melodic generation and rhythmic shaping.
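One way to picture the rhythmic-shaping step is to snap measured syllable durations from a spoken reading onto a metric grid. The Python sketch below does this under stated assumptions: the syllable durations (in seconds), the fixed tempo, and the sixteenth-note grid are all hypothetical inputs. It is not the project's algorithm, which is not described here.

```python
from fractions import Fraction
from typing import List, Sequence


def quantize_durations(
    syllable_secs: Sequence[float],
    tempo_bpm: float,
    grid: Fraction = Fraction(1, 4),
) -> List[Fraction]:
    """Hypothetical helper: map spoken syllable durations to note lengths in beats.

    Each duration is converted to beats at `tempo_bpm` and rounded to the
    nearest multiple of `grid` (1/4 beat = a sixteenth note in 4/4).
    """
    beat_sec = 60.0 / tempo_bpm
    notes = []
    for secs in syllable_secs:
        steps = round((secs / beat_sec) / grid)  # Python's round() rounds halves to even
        notes.append(max(1, steps) * grid)  # keep at least one grid step per syllable
    return notes


if __name__ == "__main__":
    # Four syllables read aloud, quantized at 120 BPM (0.5 s per beat).
    print(quantize_durations([0.25, 0.5, 0.3, 0.05], tempo_bpm=120))
```

Using `Fraction` keeps note values exact, so the output can be summed into bars without floating-point drift.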

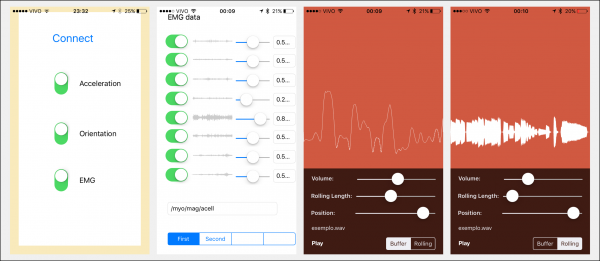

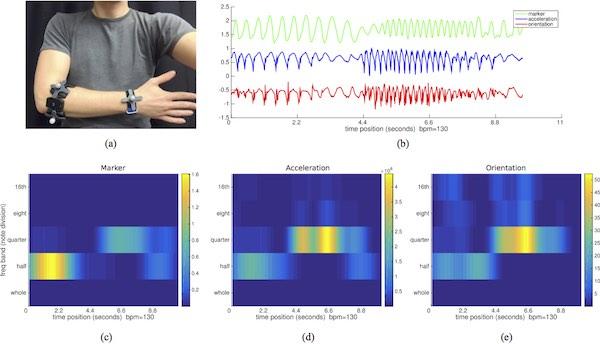

GESTURE & AUDIO ANALYSIS FROM MUSIC PERFORMANCES WITH MOBILE DEVICES

This experimental project integrates armband sensors (accelerometer, gyroscope, electromyography) with mobile devices. Features extracted from audio and gesture are used to manipulate and transform sounds through custom VST and Audio Units components. This project is a collaboration with Federico Visi (Plymouth University).
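As an illustration of how a gesture feature can drive a sound parameter, this Python sketch computes a sliding-window RMS envelope of an EMG-like signal and rescales it to a 0 to 1 control value. The window and hop sizes and the function names are hypothetical. The sketch does not reproduce the project's feature set or its VST/Audio Units components.

```python
import math
from typing import List, Sequence


def rms_envelope(signal: Sequence[float], win: int, hop: int) -> List[float]:
    """Hypothetical helper: root-mean-square of each `win`-sample frame, advancing by `hop`."""
    env = []
    for start in range(0, len(signal) - win + 1, hop):
        frame = signal[start:start + win]
        env.append(math.sqrt(sum(s * s for s in frame) / win))
    return env


def to_control(env: Sequence[float]) -> List[float]:
    """Hypothetical helper: min-max normalize an envelope to [0, 1] for use as a parameter."""
    lo, hi = min(env), max(env)
    if hi == lo:
        return [0.0 for _ in env]  # flat envelope: no meaningful range to map
    return [(e - lo) / (hi - lo) for e in env]


if __name__ == "__main__":
    emg = [1.0, -1.0, 1.0, -1.0, 0.0, 0.0, 0.0, 0.0]  # a burst of activity, then rest
    env = rms_envelope(emg, win=4, hop=4)
    print(env, to_control(env))
```

RMS is a common choice for EMG because the raw signal oscillates around zero, so its mean carries little information about muscle effort. In a live setting the min and max would come from a calibration pass rather than from the whole recording.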